Introduction to Data Warehousing

├─ Concept of Data Warehouse

├─ DBMS versus Data Warehouse

├─ Data Marts

├─ Metadata

└─ Multidimensional Data Model

├─ Multidimensional Database

│ ├─ Data Warehouse Measures

│ │ ├─ Their Categorization

│ │ └─ Computation

│ └─ Multi-dimensional Database Hierarchies

Data Warehouse Introduction

- Imagine you run a big retail chain like Amazon. You have data coming in from multiple places;

website orders, store purchases, customer service interactions, and even supplier data. Now, if

this data is scattered across different systems, how will you analyze trends, predict sales, or

make decisions?

- That’s where a Data Warehouse (DW) comes in. It acts like a central storage hub, where data from

different sources is collected, cleaned, and structured for better reporting and analysis.

- A Data Warehouse (DW) is a centralized storage system that integrates data from multiple

sources, making it available for reporting, analysis, and decision-making. Unlike regular

databases, a data warehouse is optimized for query performance, historical data storage, and

business intelligence.

- Examples of Data Warehouses

- Amazon Redshift

- Google BigQuery

- Snowflake

- Microsoft Azure Synapse

Key Defining Features of Data Warehouse

- According to Bill Inmon, known as the "Father of Data Warehousing," a data warehouse is

"a subject-oriented, integrated, non-volatile, and time-variant collection of data

in support of management decision-making."

Let's break this definition down into simple terms with examples.

Subject-Oriented Data

- Unlike a regular database that stores everything (like customer details, product info,

orders, payments), a data warehouse is focused on a specific subject; such

as sales, customer behavior, or financial trends.

- It organizes data based on key business areas instead of application

processes.

- Example: A retail company might have a data warehouse specifically for

analyzing sales trends, rather than storing day-to-day transactions like a

traditional database.

Integrated Data

- Data comes from multiple sources (databases, logs, APIs, Excel sheets) and

is transformed into a consistent format before being stored.

- This ensures that data is standardized and can be analyzed without inconsistencies.

- Example: If an airline collects data from ticket bookings, customer

feedback, and flight schedules, a data warehouse integrates this information so

that it can be used for overall performance analysis.

Non-Volatile Data

- Once data is stored in a data warehouse, it does not change (no frequent

updates, deletions, or modifications like in a transactional database).

- This helps in maintaining a historical record for accurate trend analysis.

- Example: A bank's data warehouse stores past financial

transactions so that they can be analyzed over time for fraud detection and

financial planning.

Time-Variant Data

- Data in a warehouse is stored with a time perspective, meaning it keeps

historical records for trend analysis.

- Unlike a database that shows only the current state of data, a data

warehouse allows businesses to compare past and present data.

- Example: An e-commerce company can analyze how sales have changed

over the last five years to predict future trends.

How a Data Warehouse Works

Step 1: Data Sources (Input)

- Data comes from multiple sources like:

- Sales databases

- Customer interactions

- Social media insights

- Supplier information

Step 2: Data Warehouse (Processing)

- This is where the magic happens! The data is organized, processed, and stored using various

tools and software.

Step 3: Output (Usage of Data)

- Once stored, business use the data for:

- Reporting (e.g., daily sales reports)

- Analysis (e.g., why are sales dropping in a certain region?)

- Data Mining (e.g., finding hidden patterns in customer behavior)

Real-Life Example

- 📍 Let’s say McDonald’s wants to track its daily sales across thousands of outlets

worldwide.

- Without a data warehouse, they would have to check each outlet’s database separately, which

would be a nightmare! Instead, they collect all sales data, process it in a central system,

and

analyze it to make business decisions.

- For example, they can use this data to:

- Identify which menu items sell the most in different regions

- Forecast demand for ingredients to avoid shortages

- Optimize pricing based on sales trends

The Role of ETL in a Data Warehouse

- Before the data gets stored, it needs to be processed properly. That’s where ETL (Extract,

Transform, Load) comes in.

- Extract – Data is pulled from different sources (e.g., POS systems, customer

apps).

- Transform – Data is cleaned, formatted, and structured. (E.g., dates might be

stored

differently in different systems, so they need to be standardized.)

- Load – The processed data is stored in the data warehouse, ready for analysis.

Why Do We Even Need a Data Warehouse?

-

Imagine you own a large retail business with stores in multiple cities. Every day, your stores generate huge amounts of data—sales transactions, customer purchases, stock levels, and more. All this data is stored in traditional databases, known as Database Management Systems or DBMS. These systems are designed to help with storing, updating, and retrieving current data efficiently, mainly for daily operations and transactions.

-

But here’s the problem. What if you want to analyze your data to make better business decisions? For example, you might want to know:

- What was the total sales revenue last year?

- Which city has the highest demand for electronics?

- How do this year’s sales compare to last year’s?

These questions aren’t about day-to-day operations; they’re about trends, summaries, and comparisons over time. That’s what we call analytical processing, and it's a different task altogether from just managing current transactions.

-

A regular DBMS isn’t built for these kinds of tasks. It excels at handling real-time transactional data but struggles with large-scale, historical analysis. That’s where the concept of a data warehouse comes in—a specialized system designed to store and organize massive amounts of historical data from various sources. Unlike DBMS, a data warehouse is optimized for complex queries, reporting, and data analysis, using what’s known as Online Analytical Processing (OLAP), instead of the transaction-focused Online Transaction Processing (OLTP).

DBMS vs. Data Warehouse – What’s the Difference?

- We know that a DBMS (like MySQL, PostgreSQL) is mainly used for storing and managing current

data, such as recording sales transactions, customer details, or order updates. It’s

optimized for quick inserts, updates, and deletions. However, when you try to analyze

historical data (like yearly sales trends), a DBMS becomes slow and inefficient.

- A Data Warehouse, on the other hand, is designed specifically for analyzing large volumes of

historical data. It doesn’t store daily transactions but instead consolidates and organizes

data from multiple sources to help businesses make better decisions.

- Think of it like this:

- A DBMS is like a cash register; it records every transaction in real time.

- A Data Warehouse is like a financial report; it gives you an overview of your

business performance over time.

Attribute

DBMS

Data Warehouse

Purpose

Manages day-to-day transactions

Stores historical data for analysis

Data Type

Current, real-time data

Historical, processed data

Usage

CRUD operations (Create, Read, Update, Delete)

Reporting, business intelligence

Processing Speed

Fast for transactions

Optimized for queries and analysis

Users

Application users (e.g., customers, employees)

MySQL, PostgreSQL, MongoDB

Business analysts, data scientists

Examples

MySQL, PostgreSQL, Oracle DB, SQL Server

Snowflake, Redshift, BigQuery

ETL Process?

No need for ETL

Uses ETL (Extract, Transform, Load)

Data Structure

Row-oriented

Column-oriented

Data Updates

Frequent updates, real-time changes

Read-heavy, periodic updates

- Amazon Redshift

- Google BigQuery

- Snowflake

- Microsoft Azure Synapse

Key Defining Features of Data Warehouse

- According to Bill Inmon, known as the "Father of Data Warehousing," a data warehouse is "a subject-oriented, integrated, non-volatile, and time-variant collection of data in support of management decision-making."

Let's break this definition down into simple terms with examples.

Subject-Oriented Data

- Unlike a regular database that stores everything (like customer details, product info, orders, payments), a data warehouse is focused on a specific subject; such as sales, customer behavior, or financial trends.

- It organizes data based on key business areas instead of application processes.

- Example: A retail company might have a data warehouse specifically for analyzing sales trends, rather than storing day-to-day transactions like a traditional database.

Integrated Data

- Data comes from multiple sources (databases, logs, APIs, Excel sheets) and is transformed into a consistent format before being stored.

- This ensures that data is standardized and can be analyzed without inconsistencies.

- Example: If an airline collects data from ticket bookings, customer feedback, and flight schedules, a data warehouse integrates this information so that it can be used for overall performance analysis.

Non-Volatile Data

- Once data is stored in a data warehouse, it does not change (no frequent updates, deletions, or modifications like in a transactional database).

- This helps in maintaining a historical record for accurate trend analysis.

- Example: A bank's data warehouse stores past financial transactions so that they can be analyzed over time for fraud detection and financial planning.

Time-Variant Data

- Data in a warehouse is stored with a time perspective, meaning it keeps historical records for trend analysis.

- Unlike a database that shows only the current state of data, a data warehouse allows businesses to compare past and present data.

- Example: An e-commerce company can analyze how sales have changed over the last five years to predict future trends.

How a Data Warehouse Works

Step 1: Data Sources (Input)

- Data comes from multiple sources like:

- Sales databases

- Customer interactions

- Social media insights

- Supplier information

Step 2: Data Warehouse (Processing)

- This is where the magic happens! The data is organized, processed, and stored using various tools and software.

Step 3: Output (Usage of Data)

- Once stored, business use the data for:

- Reporting (e.g., daily sales reports)

- Analysis (e.g., why are sales dropping in a certain region?)

- Data Mining (e.g., finding hidden patterns in customer behavior)

Real-Life Example

- 📍 Let’s say McDonald’s wants to track its daily sales across thousands of outlets worldwide.

- Without a data warehouse, they would have to check each outlet’s database separately, which would be a nightmare! Instead, they collect all sales data, process it in a central system, and analyze it to make business decisions.

- For example, they can use this data to:

- Identify which menu items sell the most in different regions

- Forecast demand for ingredients to avoid shortages

- Optimize pricing based on sales trends

The Role of ETL in a Data Warehouse

- Before the data gets stored, it needs to be processed properly. That’s where ETL (Extract,

Transform, Load) comes in.

- Extract – Data is pulled from different sources (e.g., POS systems, customer apps).

- Transform – Data is cleaned, formatted, and structured. (E.g., dates might be stored differently in different systems, so they need to be standardized.)

- Load – The processed data is stored in the data warehouse, ready for analysis.

Why Do We Even Need a Data Warehouse?

- Imagine you own a large retail business with stores in multiple cities. Every day, your stores generate huge amounts of data—sales transactions, customer purchases, stock levels, and more. All this data is stored in traditional databases, known as Database Management Systems or DBMS. These systems are designed to help with storing, updating, and retrieving current data efficiently, mainly for daily operations and transactions.

-

But here’s the problem. What if you want to analyze your data to make better business decisions? For example, you might want to know:

- What was the total sales revenue last year?

- Which city has the highest demand for electronics?

- How do this year’s sales compare to last year’s?

- A regular DBMS isn’t built for these kinds of tasks. It excels at handling real-time transactional data but struggles with large-scale, historical analysis. That’s where the concept of a data warehouse comes in—a specialized system designed to store and organize massive amounts of historical data from various sources. Unlike DBMS, a data warehouse is optimized for complex queries, reporting, and data analysis, using what’s known as Online Analytical Processing (OLAP), instead of the transaction-focused Online Transaction Processing (OLTP).

DBMS vs. Data Warehouse – What’s the Difference?

- We know that a DBMS (like MySQL, PostgreSQL) is mainly used for storing and managing current data, such as recording sales transactions, customer details, or order updates. It’s optimized for quick inserts, updates, and deletions. However, when you try to analyze historical data (like yearly sales trends), a DBMS becomes slow and inefficient.

- A Data Warehouse, on the other hand, is designed specifically for analyzing large volumes of historical data. It doesn’t store daily transactions but instead consolidates and organizes data from multiple sources to help businesses make better decisions.

- Think of it like this:

- A DBMS is like a cash register; it records every transaction in real time.

- A Data Warehouse is like a financial report; it gives you an overview of your business performance over time.

| Attribute | DBMS | Data Warehouse |

|---|---|---|

| Purpose | Manages day-to-day transactions | Stores historical data for analysis |

| Data Type | Current, real-time data | Historical, processed data |

| Usage | CRUD operations (Create, Read, Update, Delete) | Reporting, business intelligence |

| Processing Speed | Fast for transactions | Optimized for queries and analysis |

| Users | Application users (e.g., customers, employees) MySQL, PostgreSQL, MongoDB |

Business analysts, data scientists |

| Examples | MySQL, PostgreSQL, Oracle DB, SQL Server | Snowflake, Redshift, BigQuery |

| ETL Process? | No need for ETL | Uses ETL (Extract, Transform, Load) |

| Data Structure | Row-oriented | Column-oriented |

| Data Updates | Frequent updates, real-time changes | Read-heavy, periodic updates |

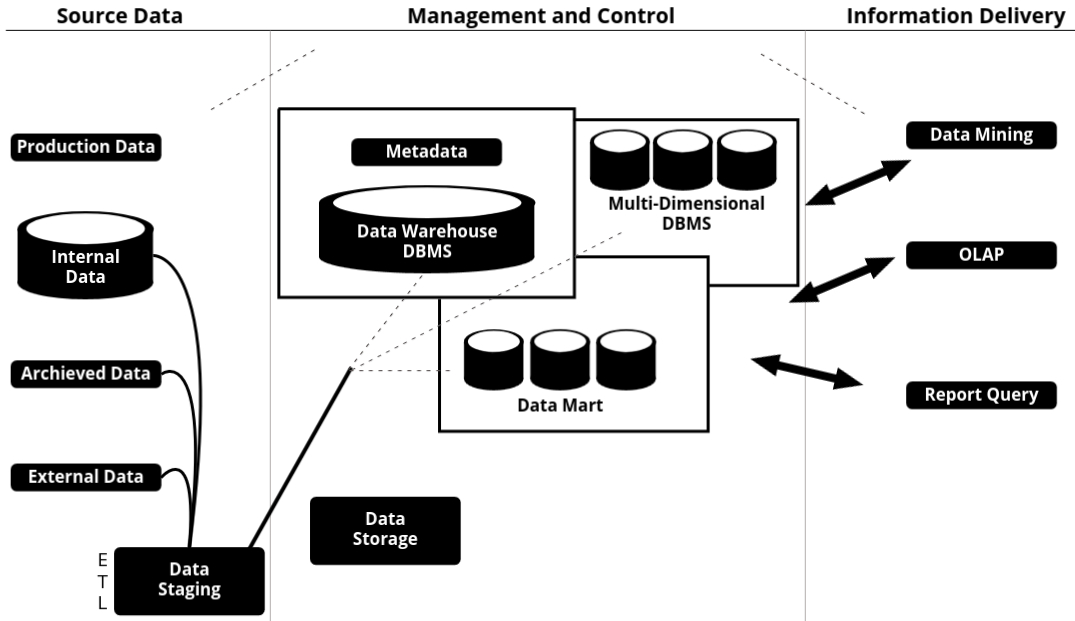

Data Warehouse Components

A data warehouse is made up of several components that work together to collect, store, and analyze data. It follows a structured flow that ensures data is properly managed from its source to the final reports. The data warehouse process can be divided into three main stages: Source Data, Management and Control, and Information Delivery.

1. Source Data (Where the Data Comes From)

The first stage of a data warehouse involves gathering data from different sources. These can be internal company systems, historical records, or even external third-party data.

- Production Data: This includes operational databases like customer transactions, sales records, and employee details that come from daily business activities.

- Internal Data: Data collected within the organization, such as financial reports, HR data, or customer feedback.

- Archived Data: Historical records that are no longer actively used in daily operations but are stored for long-term analysis.

- External Data: Information from sources outside the organization, such as market trends, competitor analysis, or third-party data providers.

- Data Staging: Before the data moves to the warehouse, it is temporarily stored and processed in a staging area. Here, it undergoes cleaning, transformation, and integration to ensure consistency.

2. Management and Control (Where the Data is Processed and Stored)

Once data is collected, it needs to be managed and stored efficiently. This stage ensures that data is well-organized and ready for analysis.

- Metadata: This is "data about data." It keeps track of where the data came from, how it was transformed, and how it is stored. Metadata helps users understand the structure and meaning of the data.

- Data Warehouse DBMS: This is the core database where the processed data is stored. It is optimized for fast retrieval and analysis.

- Data Mart: A smaller, specialized section of the data warehouse that focuses on a specific department, such as sales or finance. This allows for faster, more relevant queries.

- Multidimensional DBMS: Unlike traditional databases that store data in tables, multidimensional databases allow data to be organized in multiple dimensions (e.g., sales by region, time, and product). This enables complex analysis.

- Data Storage: This includes the actual physical or cloud-based storage where all the processed data is kept for future access.

3. Information Delivery (Where the Data is Used for Insights)

The final stage is where the data is used for decision-making. The processed and stored data is accessed through various tools to generate meaningful insights.

- Data Mining: The process of discovering patterns, trends, and relationships in large datasets. For example, a retailer might use data mining to find which products are often bought together.

- OLAP (Online Analytical Processing): A technique that allows users to perform complex queries and view data from multiple perspectives. For example, a company can analyze sales trends across different regions and time periods.

- Report Query: Standard reports and ad-hoc queries that help businesses generate dashboards and summaries. These reports help managers and executives make data-driven decisions.

A data warehouse is not just about storing data; it follows a structured process from data collection to data processing and finally to data analysis. Each component plays a key role in ensuring that businesses can make informed decisions based on accurate and well-organized data.

Metadata and Its Types

Metadata is data about data. It provides detailed information about data’s source, structure, transformations, and usage, ensuring efficient data management in a data warehouse. Metadata plays a crucial role in organizing, processing, and retrieving data for analysis.

Why is Metadata Important?

- Ensures data consistency and accuracy.

- Helps in tracking data lineage (where data comes from and how it changes).

- Improves data governance and security.

- Supports ETL (Extraction, Transformation, and Loading) processes.

In a data warehouse, metadata is categorized into three main types:

1. Operational Metadata

Operational metadata tracks the workflow and execution details of the data warehouse, ensuring smooth operation and troubleshooting.

- Records when and how data is loaded (ETL process logs).

- Tracks data refresh schedules, failures, and processing times.

- Helps in monitoring system performance and identifying bottlenecks.

Example: In an e-commerce data warehouse, operational metadata might include:

- Last ETL execution time – March 5, 2025, at 02:00 AM.

- Data refresh interval – Every 24 hours.

- Failed transactions log – 50 records failed due to missing customer IDs.

2. Extraction and Transformation Metadata

This type of metadata focuses on how data is extracted, cleaned, transformed, and loaded (ETL process details).

- Documents data sources (ERP, CRM, databases, logs, external APIs).

- Tracks data transformation rules (format changes, filtering, aggregations).

- Ensures data mapping and consistency between different systems.

Example: In a banking data warehouse, extraction and transformation metadata might include:

- Data source: Customer transactions database.

- Transformation applied: Convert transaction timestamps from UTC to IST.

- Data mapping: Cust_ID in CRM maps to Customer_ID in the warehouse.

3. End-User Metadata

End-user metadata provides business users and analysts with meaningful descriptions of data, making it easier to interpret and use.

- Defines business terms and KPIs (Key Performance Indicators).

- Explains data relationships and usage in reports and dashboards.

- Allows users to search and retrieve relevant data easily.

Example: In a retail business intelligence system, end-user metadata might include:

- Total Revenue – The sum of all completed sales transactions.

- Customer Churn Rate – The percentage of customers who stop purchasing after three months.

- Sales by Region – A report showing total sales grouped by geographic region.

Without metadata, navigating a data warehouse would be like searching for a book in a library without a catalog—it would be difficult and inefficient.

Data Mart

A Data Mart is a smaller, specialized subset of a data warehouse that focuses on a specific business function or department. It allows for faster access to relevant data, improving decision-making for targeted users.

Why Create a Data Mart?

Organizations create data marts for several reasons, including:

- Faster Data Access: Data marts store only relevant data, making queries and reports faster.

- Improved Performance: Reduces the load on the main data warehouse by handling department-specific queries.

- Better Security: Limits access to sensitive information by restricting data to relevant departments.

- Cost-Effective: Requires less storage and processing power compared to a full data warehouse.

- Departmental Autonomy: Different teams can manage and optimize their own data without affecting the central system.

Types of Data Marts

Data marts are categorized based on how they are created and maintained. The two main types are:

1. Dependent Data Mart

A Dependent Data Mart is created directly from an existing enterprise data warehouse (EDW). It acts as a smaller, department-specific extension of the central data warehouse.

- Data flows from the main data warehouse to the data mart.

- Ensures data consistency across different departments.

- Managed and controlled by the central IT team.

Example: A retail company has a main data warehouse storing all business data. A dependent data mart is created for the marketing department to analyze customer purchase patterns.

2. Independent Data Mart

An Independent Data Mart is a standalone system that does not rely on a central data warehouse. It is designed to meet the needs of a specific business unit.

- Data is collected directly from operational databases, external sources, or other applications.

- Does not require a centralized data warehouse.

- Faster and easier to implement but may lead to data inconsistencies across departments.

Example: A healthcare organization sets up an independent data mart for patient records, separate from the main hospital management system.

By using data marts, businesses can optimize data management, improve reporting efficiency, and make informed decisions faster.

Multi-Dimensional Data Model

The Multi-Dimensional Data Model is a way of organizing and storing data in a data warehouse that makes it easier to analyze from different perspectives. Instead of storing data in traditional tables like a relational database, this model arranges data in multiple dimensions, making it more efficient for reporting and business analysis.

What Data is Stored in a Data Warehouse?

Data in a data warehouse is stored in a structured format that allows for easy analysis across different categories, known as dimensions. Each piece of data is associated with multiple dimensions to help understand trends, patterns, and insights.

- In simpler words: Data is categorized by different subjects, and each subject is analyzed across various dimensions.

- Example: Imagine you are analyzing sales in a retail store. If your subject

(fact)

is "Quantity Sold," then you can analyze it using different dimensions such as:

- Item Type: What type of item was sold? (e.g., Electronics, Clothing, Groceries)

- Location: Where was the item sold? (e.g., New York, Mumbai, London)

- Time Period: When was it sold? (e.g., January 2024, Q1 2024, Last 7 Days)

- This means that the data warehouse stores information about items sold based on their type, the geographical location of the sale, and the time period in which they were sold.

How Does This Help?

By structuring data in a multi-dimensional model, businesses can quickly answer questions like:

- Which product category had the highest sales last month?

- How do sales vary across different regions?

- What were the most popular items sold during the holiday season?

This approach makes it easier to generate reports, perform data mining, and conduct business analysis efficiently.

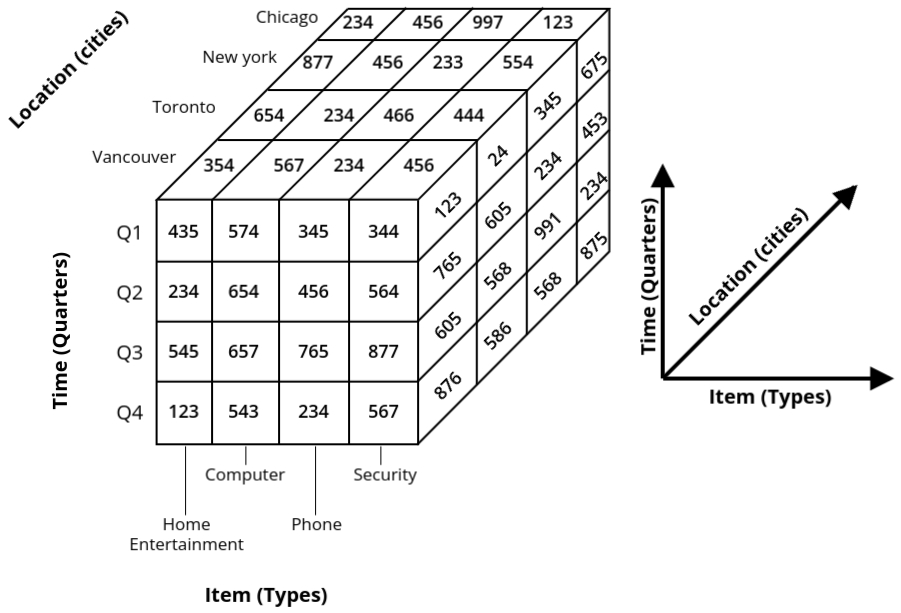

How is Data Represented in a Data Warehouse?

In a data warehouse, data is often represented in the form of a Data Cube. A data cube allows us to group or combine data into a structured, multi-dimensional format, making it easier to analyze from different perspectives.

What is a Data Cube?

- A Data Cube organizes data into multidimensional matrices, where each axis (dimension) represents a specific category of data.

- It helps visualize complex data relationships in an easy-to-understand format.

Understanding the Multi-Dimensional Data Cube

Multi-dimensional data can be represented using a data cube, as shown below:

This figure illustrates a 3-Dimensional Data Model, where data is categorized based on three different dimensions:

- X-Dimension: Item Type (e.g., Electronics, Clothing, Groceries)

- Y-Dimension: Time / Period (e.g., Q1 2024, Last Month, Holiday Season)

- Z-Dimension: Location (e.g., New York, Mumbai, London)

Each individual cell in this cube represents the number of items sold of a particular type (X), in a specific location (Z), during a given time period (Y).

Can We Add More Dimensions?

- What if we also want to include the store where the item was sold?

- We can add another dimension to include store details, such as the store ID or branch location.

- However, adding more dimensions makes the data cube more complex and difficult to visualize.

Since a data cube can include multiple dimensions beyond just three, it is often referred to as an N-Dimensional Data Model, where "N" represents the number of dimensions used in data analysis.

Why Use a Data Cube?

- Allows for faster data retrieval for analysis.

- Makes it easy to analyze trends, patterns, and relationships between different business factors.

- Supports OLAP (Online Analytical Processing) operations, like slicing, dicing, and drilling down into data.

Using a data cube helps businesses perform multi-dimensional analysis efficiently, making it a crucial part of data warehousing.

Multi-Dimensional Data Model

The Multi-Dimensional Data Model (MDDM) was developed specifically for implementing data warehouses and data marts. It provides both a structured way to store data and a powerful mechanism for business analysis.

How Does the Multi-Dimensional Data Model Work?

- This model stores data in the form of a Data Cube, which organizes data into multiple dimensions.

- Most data warehousing solutions use either two-dimensional or three-dimensional cubes to analyze data.

- A data cube allows users to view and analyze data from multiple dimensions simultaneously.

Understanding Dimensions in Data Warehousing

In a data warehouse, dimensions refer to entities based on which an organization wants to maintain records. These dimensions help in categorizing and analyzing data efficiently.

- Example: Consider a store that tracks its sales data. The main dimensions could

be:

- Time: Monthly sales, Quarterly reports, Annual performance.

- Item Type: Different product categories (Electronics, Groceries, Clothing).

- Location: Sales across different branches and cities.

- These dimensions allow businesses to analyze data from different perspectives and make better decisions.

Why Use a Multi-Dimensional Database?

- It helps businesses quickly and accurately answer data-related queries.

- It supports efficient storage and retrieval of large amounts of structured data.

- It enables users to perform complex data analysis using multiple perspectives.

Role of OLAP in Multi-Dimensional Data Models

Online Analytical Processing (OLAP) is a key component of multi-dimensional data models. OLAP tools in data warehousing help users analyze data from multiple angles and dimensions.

- OLAP enables users to slice, dice, drill-down, and roll-up data for deeper insights.

- It supports fast querying and analysis of large datasets, making decision-making more efficient.

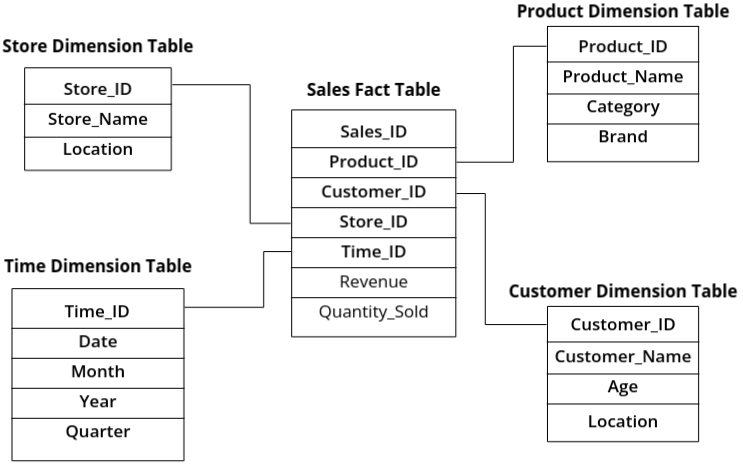

Components of Multi-Dimensional Data Model (MDDM)

The Multi-Dimensional Data Model (MDDM) is built on two key components: Dimensions and Facts. These components help structure data for analysis in a data warehouse.

Key Components of MDDM

- Dimensions: These provide descriptive context for analyzing data. Dimensions

categorize and organize business information.

- For example, in a sales database, common dimensions include Product, Time, Store Location.

- Facts: These are the measurable business values stored in the fact table. They

represent performance metrics such as sales revenue or quantity sold.

- For example, a sales fact table may include Sales Amount, Quantity Sold, Discount Applied.

Types of Tables in MDDM

There are two main types of tables in a Multi-Dimensional Data Model:

1. Dimension Tables

A dimension table stores descriptive attributes related to a subject. Each dimension table has a Primary Key (PK) that uniquely identifies each record.

For example, in a college placement system, we have the following four dimension tables:

2. Fact Table

The Fact Table stores business metrics and contains only Foreign Keys

(FKs) that reference the primary keys from the dimension tables.

A fact table has two types of columns: one column of foreign keys (pointing to the dimension

tables) and other of numeric values.

For the college placement system, the Placement Fact Table will contain:

How Dimension & Fact Tables Work Together

The Fact Table links different Dimension Tables to help analyze placement data. Some example queries include:

- How many students were placed in a particular company?

- Query the Fact Table and join it with the Company Dimension Table using Company_ID.

- Which teacher’s students had the highest selection rate?

- Query the Fact Table and join it with the Teacher Dimension Table using Teacher_ID.

- How many students were placed by a particular TPO?

- Query the Fact Table and join it with the TPO Dimension Table using TPO_ID.

Schemas for Multi-Dimensional Data

In data warehousing, schemas define how data is structured in a multi-dimensional model. The well-known schemas are:

- Star Schema: A single Fact Table with multiple Dimension Tables directly linked to it.

- Snowflake Schema: A single Fact Table with Dimension Tables organized hierarchically through normalization.

- Fact Constellation Schema: Multiple Fact Tables sharing common Dimension Tables.

Each schema has a Fact Table that stores all the facts about the subject or measure. Each fact is associated with multiple dimension keys that link it to Dimension Tables.

Star Schema

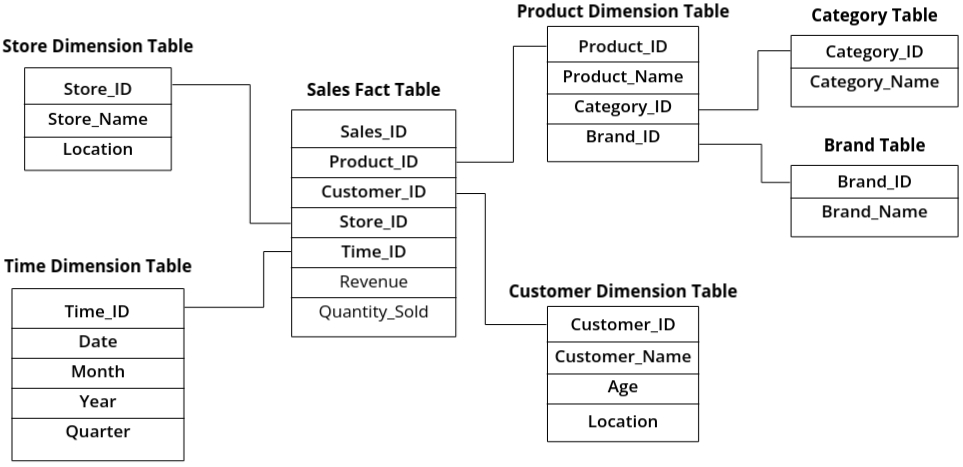

- In the Star Schema, a central Fact Table is surrounded by multiple Dimension Tables. Each Dimension Table contains descriptive attributes related to a particular aspect of the fact.

The Star Schema is the basic form of a dimensional data model, in which data are organized into facts (quantitative data) and dimensions (qualitative attributes). This schema is widely used in data warehouses for its simplicity and ease of use.

- The central Fact Table is typically large and contains numerical data. It does not have redundancy, meaning that each piece of information is stored only once.

- Each row (or tuple) in the Fact Table contains a foreign key that links it to the corresponding records in the Dimension Tables, which describe the details of the related dimension.

Example Scenario:

Consider a sales data warehouse. The Fact Table will store information about sales transactions, such as sales revenue, quantity sold, and the time of sale. The Dimension Tables will store descriptive attributes that provide context to these transactions, such as:

Problem: Redundancy

In the Star Schema, the Dimension Tables are typically denormalized, which means that some level of data redundancy can occur. For example:

- In the Product Dimension Table, every time a sale of a specific product occurs, the Category and Brand information is repeated for each sales transaction in the Fact Table.

- Similarly, for the Customer and Store dimensions, attributes such as Location are repeated for every sale made in that store or by that customer.

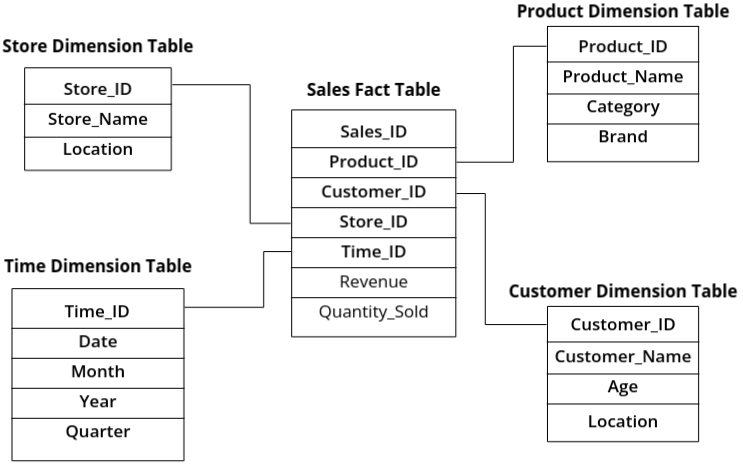

Solution to Redundancy in Snowflake Schema:

- To reduce redundancy, we can implement the Snowflake Schema, where the Dimension Tables are normalized into multiple related tables. Instead of storing all descriptive attributes in a single table, we break them down into smaller tables to eliminate duplicate data.

Advantages of Star Schema:

- Simplicity: The structure of the Star Schema is intuitive and easy to understand. The Fact Table is at the center, and the Dimension Tables are directly connected, creating a clear and straightforward design.

- Efficient Querying: Because the schema is denormalized, there are fewer joins between tables, which often results in faster query performance.

- Optimized for OLAP: The Star Schema is particularly well-suited for Online Analytical Processing (OLAP), as it allows for fast and easy retrieval of aggregated data.

Snowflake Schema

The Snowflake Schema is an extension of the Star Schema, but it addresses the redundancy issue by normalizing the Dimension Tables. In this schema, the Dimension Tables are broken down into multiple related tables, forming a snowflake-like structure. This reduces redundancy and saves storage space by avoiding the repetition of data.

How Snowflake Solves Redundancy:

- In the Star Schema, each Dimension Table contains repetitive information for each row in the Fact Table. For example, product-related details like Category and Brand are repeated for each sale of the same product. This can lead to a lot of redundant data and increases storage requirements.

- In the Snowflake Schema, the Dimension Tables are split into smaller tables that are more normalized. The repeated data is moved to separate tables, and relationships between these tables are established using foreign keys.

Example Scenario:

Let’s take the same sales data warehouse example we used for the Star Schema:

- Star Schema Structure (before normalization):

In this structure, product details like Category and Brand are repeated multiple times in the Product Dimension Table.

- Snowflake Schema Structure (after normalization): Now, we normalize the Product Dimension Table to avoid redundancy. We create separate tables for Category and Brand, and link them using foreign keys.

Key Points of Snowflake Schema:

- Normalization: Unlike the Star Schema, the Snowflake Schema normalizes the Dimension Tables, breaking them down into smaller related tables.

- Reduction of Redundancy: By separating out repeating data (e.g., Category and Brand), redundancy is reduced, making the schema more efficient in terms of storage.

- Increased Complexity: While Snowflake Schema reduces redundancy, it introduces more complexity in terms of table structure and the relationships between tables. Queries may also become more complex since multiple tables need to be joined.

Advantages of Snowflake Schema:

- Reduced Redundancy: By normalizing the Dimension Tables, repeated information (such as Category or Brand) is stored only once, saving space.

- Improved Data Integrity: With less duplication of data, the chances of inconsistency or errors are minimized.

- Efficient Storage: Reducing redundancy means that less storage space is required, especially for large data warehouses.

Disadvantages of Snowflake Schema:

- Complex Queries: Since the data is split across multiple tables, queries may become more complex and require multiple joins.

- Slower Query Performance: More joins can result in slower query performance, especially when dealing with large datasets.

Difference between Star Schema and Snowflake Schema

- Normalization: Star schema uses a denormalized structure, while the Snowflake schema uses a partially normalized structure.

- Query Performance: Star schema typically performs better for querying due to fewer joins, whereas Snowflake schema can be slower because it involves more joins.

- Data Redundancy: Star schema has high data redundancy, whereas Snowflake schema has low data redundancy.

- Disk Space: Star schema tends to occupy more disk space due to data duplication, while Snowflake schema requires less disk space.

- Ease of Use: Star schema is easier to understand and implement, making it more user-friendly for non-technical users. Snowflake schema requires more effort to design and maintain.

- Storage Efficiency: Snowflake schema is more storage-efficient due to its normalized structure, making it a better choice for large datasets and complex data environments.

- Complexity: Star schema is simpler, while Snowflake schema is more complex due to its multiple levels of dimension tables and normalization.

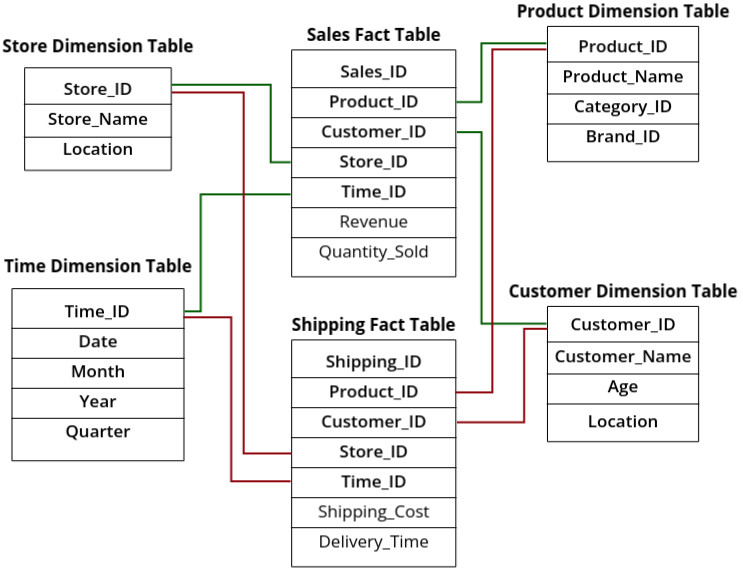

Fact Constellation Schema

- The Fact Constellation Schema is an advanced multi-dimensional data model where multiple Fact Tables share common Dimension Tables. This schema is also known as a Galaxy Schema because it represents a complex data warehouse structure with multiple facts (measures) connected to shared dimensions.

- In Star Schema and Snowflake Schema, we have a single Fact Table with multiple Dimension Tables.

However, in large-scale business scenarios, organizations often need to analyze different types

of facts (e.g., sales, shipping, inventory) that are related to the same dimensions.

To handle this, Fact Constellation Schema allows multiple Fact Tables to share the same Dimension Tables. This helps in handling complex queries efficiently and enables a multi-fact analysis.

Example Scenario

Let’s take an e-commerce company that wants to analyze sales and shipping data. Instead of using a single Fact Table, we create two:

- Sales Fact Table → Stores sales-related data.

- Shipping Fact Table → Stores shipping-related data.

Key Points of Fact Constellation Schema:

- Multiple Fact Tables: Unlike Star and Snowflake schemas, which use a single Fact Table, Fact Constellation Schema supports multiple Fact Tables.

- Shared Dimension Tables: The same Dimension Tables are used across multiple Fact Tables, allowing for more efficient storage.

- Complex Data Relationships: This schema is suitable for large data warehouses where multiple business processes are analyzed.

Advantages of Fact Constellation Schema:

- Better Representation of Real-World Data: Organizations often deal with multiple facts, and this schema captures them effectively.

- Efficient for Multi-Fact Analysis: Allows analyzing multiple business processes (e.g., sales and shipping) together.

- Optimized for Large Data Warehouses: Suitable for businesses with large and complex datasets.

Disadvantages of Fact Constellation Schema:

- Complex Query Processing: Since multiple Fact Tables and Dimension Tables are involved, queries can become complex.

- Increased Maintenance Effort: Managing multiple Fact Tables requires extra maintenance and careful database design.

Data Warehouse Measures

- A measure is basically a number that helps track how well a business is doing. It tells us about performance or behavior based on different factors (dimensions). The actual values we use to measure things are called variables.

- A measure depends on a combination of different elements (members) from the dimension and is stored in facts.

- Usually, measures are numbers that you can add up, like total sales or revenue. And you’re not stuck with just one measure—you can have multiple ones in a fact table. For example, if you’re tracking international purchases, you might have separate measures for different currencies.

- Let’s say you’re working with sales data in the retail industry. Your fact table might include measures like cost, list price, and average sale price to give you a complete picture of sales performance.

Types of Measures

- Additive: Measures that can be summed across all dimensions.

- Semi-Additive: Measures that can be summed across some dimensions but not all.

- Non-Additive: Measures that cannot be summed across any dimension.

Additive Measures

- These measures can be aggregated (summed) across all dimensions without any restrictions.

- For example, Total Sales Revenue can be summed across time, location, and product categories.

- Other examples include Total Quantity Sold and Total Profit.

Semi-Additive Measures

- These measures can be aggregated across some dimensions but not across all.

- For example, Account Balance can be summed across different branches of a bank but not across time.

- Other examples include Inventory Level and Headcount (total employees at a given time).

Non-Additive Measures

- These measures cannot be summed across any dimension.

- For example, Profit Margin Percentage cannot be directly summed across products or time.

- Other examples include Customer Satisfaction Rating and Discount Percentage.

Measures: Their Categorization and Computation

Measures in a data warehouse are categorized based on how they can be computed and aggregated. The three main types of measures are:

- Distributive Measures: Can be computed in parts and then combined.

- Algebraic Measures: Can be computed using a formula involving distributive measures.

- Holistic Measures: Require the entire dataset for computation and cannot be divided into smaller parts.

Distributive Measures

- A measure is distributive if it can be computed for subparts of the data and then combined to get the final result.

- For example, COUNT, SUM, and MIN/MAX are distributive because they can be calculated in parts and merged.

- Example: The total number of customers in different regions can be counted separately and then summed up for the overall count.

Algebraic Measures

- A measure is algebraic if it can be computed using a formula that involves distributive measures.

- For example, Average (AVG) = SUM / COUNT is an algebraic measure because it uses distributive measures (SUM and COUNT).

- Other examples include Rate, Percentage, and Weighted Average.

Holistic Measures

- A measure is holistic if it requires all the data for computation and cannot be divided into smaller parts.

- For example, Median, Mode, and Percentiles require the entire dataset to be analyzed.

- These measures are often used in complex statistical analysis and cannot be aggregated like distributive or algebraic measures.