Fuzzy Variables


Definition of Fuzzy Variables:

A fuzzy variable is a variable that can take on values expressed as linguistic terms rather than precise numerical values. These linguistic terms are represented by fuzzy sets.

Example: Temperature as a fuzzy variable.

Linguistic terms: {Cold, Warm, Hot}

Fuzzy sets:

- Cold: µ(x) = {1 at 0°C, 0.8 at 10°C, 0 at 20°C}

- Warm: µ(x) = {0 at 10°C, 0.5 at 20°C, 1 at 25°C, 0.2 at 30°C}

- Hot: µ(x) = {0 at 25°C, 0.6 at 30°C, 1 at 40°C}

Representation using Linguistic Terms:

Fuzzy variables are commonly represented using linguistic terms that correspond to fuzzy sets. These terms capture the imprecision of real-world scenarios.


Example: Speed as a fuzzy variable:

Linguistic terms: {Slow, Moderate, Fast}

Fuzzy sets:

- Slow: µ(x) = {1 at 0 km/h, 0.7 at 20 km/h, 0 at 50 km/h}

- Moderate: µ(x) = {0 at 20 km/h, 0.5 at 50 km/h, 1 at 60 km/h, 0.3 at 80 km/h}

- Fast: µ(x) = {0 at 60 km/h, 0.8 at 100 km/h, 1 at 120 km/h}
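The Speed variable can be sketched in code as a mapping from linguistic terms to sampled membership values. This is a minimal illustration, not a standard API: the names `SPEED_TERMS`, `membership`, and `fuzzify` are hypothetical, and the linear interpolation between sample points (with endpoint values held constant outside the sampled range) is an assumption, since the notes only give membership values at a few speeds.

```python
from bisect import bisect_right

# Sample points copied from the Speed example: (speed in km/h, membership).
SPEED_TERMS = {
    "Slow": [(0, 1.0), (20, 0.7), (50, 0.0)],
    "Moderate": [(20, 0.0), (50, 0.5), (60, 1.0), (80, 0.3)],
    "Fast": [(60, 0.0), (100, 0.8), (120, 1.0)],
}


def membership(points, x):
    """Degree of membership of x, linearly interpolated between samples.

    Assumption (not from the notes): outside the sampled range the
    nearest endpoint value is held constant.
    """
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)  # index of the first sample to the right of x
    (x0, m0), (x1, m1) = points[i - 1], points[i]
    return m0 + (m1 - m0) * (x - x0) / (x1 - x0)


def fuzzify(terms, x):
    """Map a crisp value to its degree in every linguistic term."""
    return {name: membership(points, x) for name, points in terms.items()}


degrees = fuzzify(SPEED_TERMS, 55)
print(degrees)
```

Under these assumptions, a speed of 55 km/h lies halfway between the Moderate samples at 50 km/h (0.5) and 60 km/h (1), so it is Moderate to degree 0.75 and neither Slow nor Fast.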


Importance of Fuzzy Variables:

Fuzzy variables are essential in modeling and solving problems where exact measurements are difficult or impossible to obtain, especially in scenarios involving ambiguity or vagueness.


Applications:


Weather Forecasting:

Fuzzy variables like "Cloudiness" and "Wind Strength" help in predicting weather conditions without requiring precise values.

Control Systems:

Fuzzy variables such as "Speed" and "Distance" are used in fuzzy logic controllers, e.g., for cruise control in cars.

Decision Making:

Fuzzy variables like "Risk Level" and "Profitability" assist in business and financial decision-making under uncertainty.


Fuzzy Rule / Fuzzy Implication / Fuzzy If-Then Rule

- A fuzzy implication (also called a fuzzy if-then rule or fuzzy conditional statement) is a rule used in fuzzy logic systems to describe relationships between fuzzy sets in natural language terms. These rules are the foundation of fuzzy inference systems, where decisions are made based on fuzzy conditions and their outcomes.


The basic structure of a fuzzy rule can take the following forms:

- If x is A, then y is B.

- If x is A, then y is B; else y is C.


Explanation of Forms:


Form 1: If x is A, then y is B

This means that when the value of x belongs to the fuzzy set A (to some degree), the value of y is associated with the fuzzy set B. The strength of the relationship between x and y depends on their respective membership values.

Example:

- If a mango is yellow, then the mango is sweet.

- Here:

- x = mango color.

- A = "yellow" (a fuzzy set with values between 0 and 1).

- y = mango taste.

- B = "sweet" (a fuzzy set with values between 0 and 1).

- If a mango is 70% yellow (membership = 0.7), it is inferred to be 70% sweet.


Form 2: If x is A, then y is B; else y is C

This means that if x does not belong to the fuzzy set A (or belongs weakly), y will instead belong to a different fuzzy set C. This adds an alternative outcome when the primary condition is not satisfied.

Example:

- If a mango is yellow, then the mango is sweet; else, it is sour.

- Here:

- x = mango color.

- A = "yellow".

- y = mango taste.

- B = "sweet".

- C = "sour".

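- If a mango is 50% yellow (membership = 0.5), the "then" branch supports "sweet" to a degree of 0.5; because the complement of "yellow" is also 0.5, the "else" branch supports "sour" to a degree of 0.5 as well.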

- If a mango is not yellow (membership = 0), it is fully sour (membership = 1 for sour).


Applications of Fuzzy Rules:

- Controlling appliances: If the room is hot, then increase fan speed.

- Weather prediction: If the sky is cloudy, then there is a high chance of rain.

- Decision-making: If the workload is high, then assign more resources; else, reduce resources.


If x is A, then y is B:

The implication relation R can be written as: \[ R = (A \times B) \cup (A^c \times Y) \]

If x is A, then y is B; else y is C:

The implication relation R can be written as: \[ R = (A \times B) \cup (A^c \times C) \]
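Written element by element, these two relations take the following form. This assumes the operator choices used in the worked solution that follows: the minimum for the Cartesian product, the maximum for the union, and \( 1 - \mu_A(x) \) for the complement \( A^c \). Other operator choices exist and would give different relations.

For "if x is A, then y is B", every \( y \in Y \) has membership 1 in the universe \( Y \), so \( \min(1 - \mu_A(x), 1) = 1 - \mu_A(x) \) and

\[ \mu_R(x, y) = \max\big( \min(\mu_A(x), \mu_B(y)),\; 1 - \mu_A(x) \big) \]

For "if x is A, then y is B; else y is C":

\[ \mu_R(x, y) = \max\big( \min(\mu_A(x), \mu_B(y)),\; \min(1 - \mu_A(x), \mu_C(y)) \big) \]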

Question:

Let \( X = \{a, b, c, d\} \), \( Y = \{1, 2, 3, 4\} \) and

\( A = \{(a, 0), (b, 0.8), (c, 0.6), (d, 1)\} \)

\( B = \{(1, 0.2), (2, 1), (3, 0.8), (4, 0)\} \)

\( C = \{(1, 0), (2, 0.4), (3, 1), (4, 0.8)\} \)

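Here, \( X \) and \( Y \) are crisp universal sets, \( A \) is a fuzzy set on \( X \), and \( B \) and \( C \) are fuzzy sets on \( Y \).

Determine the implication relations for the following rules:

1. If \( x \) is \( A \), then \( y \) is \( B \).

2. If \( x \) is \( A \), then \( y \) is \( B \); else \( y \) is \( C \).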

Solution:

1. If \( x \) is \( A \), then \( y \) is \( B \):

The implication relation is defined as: \[ R = (A \times B) \cup (A^c \times Y) \]

Step 1: Cartesian Product \( A \times B \)

For \( A \times B \), we calculate the minimum of the membership values of \( A \) and \( B \) for each pair:

\[ A \times B = \begin{pmatrix} \min(0, 0.2) & \min(0, 1) & \min(0, 0.8) & \min(0, 0) \\ \min(0.8, 0.2) & \min(0.8, 1) & \min(0.8, 0.8) & \min(0.8, 0) \\ \min(0.6, 0.2) & \min(0.6, 1) & \min(0.6, 0.8) & \min(0.6, 0) \\ \min(1, 0.2) & \min(1, 1) & \min(1, 0.8) & \min(1, 0) \end{pmatrix} \]

Which simplifies to:

\[ A \times B = \begin{pmatrix} 0 & 0 & 0 & 0 \\ 0.2 & 0.8 & 0.8 & 0 \\ 0.2 & 0.6 & 0.6 & 0 \\ 0.2 & 1 & 0.8 & 0 \end{pmatrix} \]

Step 2: Complement of \( A \), \( A^c \)

The complement \( A^c \) is computed by subtracting each membership value in \( A \) from 1:

\[ A^c = \{(a, 1), (b, 0.2), (c, 0.4), (d, 0)\} \]

Step 3: Cartesian Product \( A^c \times Y \)

For \( A^c \times Y \), we pair each element of \( A^c \) with each element of \( Y \) and compute the minimum of their membership values. Since \( Y = \{(1, 1), (2, 1), (3, 1), (4, 1)\} \), each element in \( Y \) has the membership value of 1:

\[ A^c \times Y = \begin{pmatrix} \min(1, 1) & \min(1, 1) & \min(1, 1) & \min(1, 1) \\ \min(0.2, 1) & \min(0.2, 1) & \min(0.2, 1) & \min(0.2, 1) \\ \min(0.4, 1) & \min(0.4, 1) & \min(0.4, 1) & \min(0.4, 1) \\ \min(0, 1) & \min(0, 1) & \min(0, 1) & \min(0, 1) \end{pmatrix} \]

Which simplifies to:

\[ A^c \times Y = \begin{pmatrix} 1 & 1 & 1 & 1 \\ 0.2 & 0.2 & 0.2 & 0.2 \\ 0.4 & 0.4 & 0.4 & 0.4 \\ 0 & 0 & 0 & 0 \end{pmatrix} \]

Step 4: Union of \( A \times B \) and \( A^c \times Y \)

We now compute the union of \( A \times B \) and \( A^c \times Y \) by taking the element-wise maximum of the two matrices:

\[ R = (A \times B) \cup (A^c \times Y) = \begin{pmatrix} \max(0, 1) & \max(0, 1) & \max(0, 1) & \max(0, 1) \\ \max(0.2, 0.2) & \max(0.8, 0.2) & \max(0.8, 0.2) & \max(0, 0.2) \\ \max(0.2, 0.4) & \max(0.6, 0.4) & \max(0.6, 0.4) & \max(0, 0.4) \\ \max(0.2, 0) & \max(1, 0) & \max(0.8, 0) & \max(0, 0) \end{pmatrix} \]

Which simplifies to:

\[ R = \begin{pmatrix} 1 & 1 & 1 & 1 \\ 0.2 & 0.8 & 0.8 & 0.2 \\ 0.4 & 0.6 & 0.6 & 0.4 \\ 0.2 & 1 & 0.8 & 0 \end{pmatrix} \]

Final Answer:

\[ R = \begin{pmatrix} 1 & 1 & 1 & 1 \\ 0.2 & 0.8 & 0.8 & 0.2 \\ 0.4 & 0.6 & 0.6 & 0.4 \\ 0.2 & 1 & 0.8 & 0 \end{pmatrix} \]

2. If \( x \) is \( A \), then \( y \) is \( B \); else \( y \) is \( C \):

For this case, we compute \( R = (A \times B) \cup (A^c \times C) \). The product \( A \times B \) is unchanged from the previous calculation; the "else" branch replaces the universe \( Y \) with the fuzzy set \( C \), so \( A^c \) is now paired with \( C \).

Step 1: Complement of A (\(A^c\)) is the same as before:

\[ A^c = \{(a, 1), (b, 0.2), (c, 0.4), (d, 0)\} \]

Step 2: Compute \( A^c \times C \) by taking the minimum of the membership values of \( A^c \) and \( C \) for each pair:

\[ A^c \times C = \begin{pmatrix} 0 & 0.4 & 1 & 0.8 \\ 0 & 0.2 & 0.2 & 0.2 \\ 0 & 0.4 & 0.4 & 0.4 \\ 0 & 0 & 0 & 0 \end{pmatrix} \]

Step 3: Union of \( A \times B \) (computed in the first part) and \( A^c \times C \), taking the element-wise maximum:

\[ R = (A \times B) \cup (A^c \times C) = \begin{pmatrix} 0 & 0.4 & 1 & 0.8 \\ 0.2 & 0.8 & 0.8 & 0.2 \\ 0.2 & 0.6 & 0.6 & 0.4 \\ 0.2 & 1 & 0.8 & 0 \end{pmatrix} \]

This is the final result for the implication relation "if x is A, then y is B; else y is C."
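Both relations can be checked with a short script. The sketch below uses plain Python lists and dictionaries; the helper names `complement`, `cartesian`, and `union` are hypothetical, and the operators (min for the Cartesian product, max for the union, 1 − µ for the complement) are the ones used in the solution above.

```python
X = ["a", "b", "c", "d"]
Y = [1, 2, 3, 4]
A = {"a": 0.0, "b": 0.8, "c": 0.6, "d": 1.0}
B = {1: 0.2, 2: 1.0, 3: 0.8, 4: 0.0}
C = {1: 0.0, 2: 0.4, 3: 1.0, 4: 0.8}


def complement(fuzzy_set):
    """Standard complement 1 - mu; rounded to hide float noise (1 - 0.8)."""
    return {k: round(1.0 - mu, 10) for k, mu in fuzzy_set.items()}


def cartesian(left, right):
    """Cartesian product as a matrix: rows follow X, columns follow Y."""
    return [[min(left[x], right[y]) for y in Y] for x in X]


def union(r1, r2):
    """Element-wise maximum of two relation matrices."""
    return [[max(p, q) for p, q in zip(row1, row2)]
            for row1, row2 in zip(r1, r2)]


A_c = complement(A)
Y_full = {y: 1.0 for y in Y}  # the universe Y as a fuzzy set: all ones

# Rule 1: if x is A, then y is B
R_then = union(cartesian(A, B), cartesian(A_c, Y_full))
# Rule 2: if x is A, then y is B; else y is C
R_else = union(cartesian(A, B), cartesian(A_c, C))

for row in R_then:
    print(row)
print()
for row in R_else:
    print(row)
```

The printed rows correspond to the elements a, b, c, d of \( X \) and reproduce the two matrices derived by hand.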

Membership Function

- What is it? A membership function is a function that specifies the degree or level to which a given input belongs to a set.

- Degree of Membership: It specifies the extent to which a particular input belongs to a set. The value of the degree of membership is always between 0 and 1. This value is also called the membership value of an element \( x \) in the set \( A \).


Understanding with an Example:

Let's say we have a fuzzy set \( A \) and an element \( x \) of the universe.

- If the membership value is \( 0.6 \), it means that \( x \) partially belongs to set \( A \) to a degree of 0.6.

- If the membership value is \( 0.9 \), it means \( x \) belongs to set \( A \) more strongly (to a higher degree).

- If the membership value is \( 1 \), it means \( x \) fully belongs to set \( A \).

- If the membership value is \( 0 \), it means \( x \) does not belong to set \( A \) at all.


Mathematical Definition:

A fuzzy set \( A \) of a universe \( X \) is defined by the membership function \( \mu_A(x) \), which represents the degree of membership of \( x \) in set \( A \).

- For example, the degree to which an element \( x \) belongs to set \( A \) is written \( \mu_A(x) \).

Example:

- \( \mu_A(x) = 0.3 \): This means \( x \) belongs to set \( A \) with a degree of 0.3, i.e., it partially belongs to \( A \).

- The membership function \( \mu_A(x) : X \to [0, 1] \) indicates that the membership value must be within the range [0, 1].

- \( \mu_A(x) = 1 \): \( x \) fully belongs to \( A \).

- \( \mu_A(x) = 0 \): \( x \) does not belong to \( A \).

- \( 0 < \mu_A(x) < 1 \): \( x \) partially belongs to \( A \).

- Applications: Membership functions are widely used in the fuzzification and defuzzification processes in fuzzy logic systems. They help in converting crisp inputs into fuzzy values and vice versa.

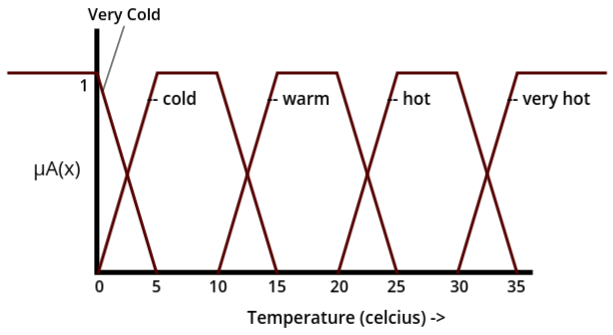

Graphical Representation of Membership Function

- The membership function represents different temperature categories: [very cold, cold, warm, hot, very hot].


Let’s understand how the graph works:

- When the temperature is below 0 degrees Celsius, it fully belongs to the "very cold" category because the membership function (μA(x)) value is 1. This means the temperature is completely categorized as "very cold."

- As the temperature increases from 0 to 5 degrees Celsius, the μA(x) value for "very cold" gradually decreases. By the time it reaches 5 degrees Celsius, the μA(x) value for "very cold" becomes 0. This indicates that the temperature no longer belongs to the "very cold" category.

- During the same range (0 to 5 degrees Celsius), the μA(x) value for "cold" starts increasing. For example, it might go from 0.1 to 0.2 to 0.3, and so on. At 5 degrees Celsius, the μA(x) value for "cold" becomes 1, meaning the temperature fully belongs to the "cold" category.

- From 5 to 10 degrees Celsius, the temperature remains fully in the "cold" category, as indicated by the μA(x) value of 1. A similar pattern is shown for other categories like "warm," "hot," and "very hot" in the graph.
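The behaviour described for the "very cold" and "cold" categories can be sketched as two piecewise functions. The function names are hypothetical, and the straight-line ramps between 0 and 5 degrees Celsius are an assumption: the text says only that the values change gradually. The text also does not describe "cold" above 10 degrees Celsius, so the sketch declines to return a value there.

```python
def very_cold(t):
    """1 at or below 0 degrees C, falling (assumed linearly) to 0 at 5."""
    if t <= 0:
        return 1.0
    if t >= 5:
        return 0.0
    return (5 - t) / 5


def cold(t):
    """0 at or below 0 degrees C, rising (assumed linearly) to 1 at 5,
    then 1 up to 10 degrees C."""
    if t > 10:
        # The notes do not say how "cold" behaves past 10 degrees C.
        raise ValueError("shape above 10 degrees C is not given in the text")
    if t <= 0:
        return 0.0
    if t >= 5:
        return 1.0
    return t / 5


for t in (-2, 0, 2, 5, 8):
    print(t, very_cold(t), cold(t))
```

At 2 degrees Celsius, for instance, this gives 0.6 for "very cold" and 0.4 for "cold": the temperature belongs partly to both categories at once.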

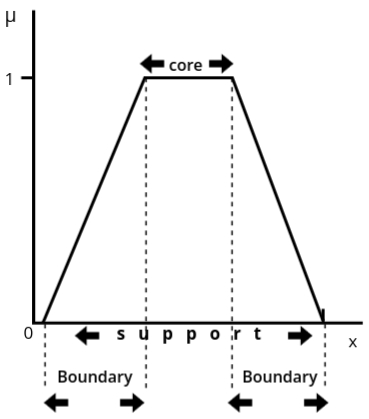

Membership Function Features

A is a fuzzy set, and x is a member of A. These features of the membership function can be understood by plotting them on a graph:

- Support: The support of a fuzzy set is the set of all elements x for which the membership value μA(x) is greater than 0.

Meaning: It represents all points where the fuzzy set has some degree of membership, no matter how small.

Mathematical Representation:

Support(A) = {x | μA(x) > 0}

Example: If μA(x) > 0 for elements x = 1, 2, 3, then the support of the set A is {1, 2, 3}.

- Core: The core of a fuzzy set is the set of all elements x for which the membership value μA(x) is exactly equal to 1.

Meaning: It represents the points that fully belong to the fuzzy set with the maximum membership value.

Mathematical Representation:

Core(A) = {x | μA(x) = 1}

Example: If μA(x) = 1 for elements x = 5 and x = 6, then the core of the set A is {5, 6}.

- Boundary: The boundary of a fuzzy set consists of all elements x where the membership value is strictly between 0 and 1.

Meaning: It represents the elements that belong to the fuzzy set partially (neither fully included nor completely excluded).

Mathematical Representation:

Boundary(A) = {x | 0 < μA(x) < 1}

Example: If μA(x) = 0.4 for x = 4 and μA(x) = 0.8 for x = 7, then the boundary of the set A includes {4, 7}.
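For a discrete fuzzy set, the three features translate directly into set comprehensions. The sketch below uses a made-up fuzzy set `A` (its values are illustrative, not taken from the notes) and hypothetical helper names.

```python
# Hypothetical discrete fuzzy set: element -> membership value.
A = {1: 0.0, 2: 0.3, 3: 0.7, 4: 1.0, 5: 1.0, 6: 0.4, 7: 0.0}


def support(fuzzy_set):
    """Elements with membership greater than 0."""
    return {x for x, mu in fuzzy_set.items() if mu > 0}


def core(fuzzy_set):
    """Elements with membership exactly 1."""
    return {x for x, mu in fuzzy_set.items() if mu == 1}


def boundary(fuzzy_set):
    """Elements with membership strictly between 0 and 1."""
    return {x for x, mu in fuzzy_set.items() if 0 < mu < 1}


print(support(A))   # {2, 3, 4, 5, 6}
print(core(A))      # {4, 5}
print(boundary(A))  # {2, 3, 6}
```

Since every membership value lies in [0, 1], the support is always the union of the core and the boundary, which the example confirms.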

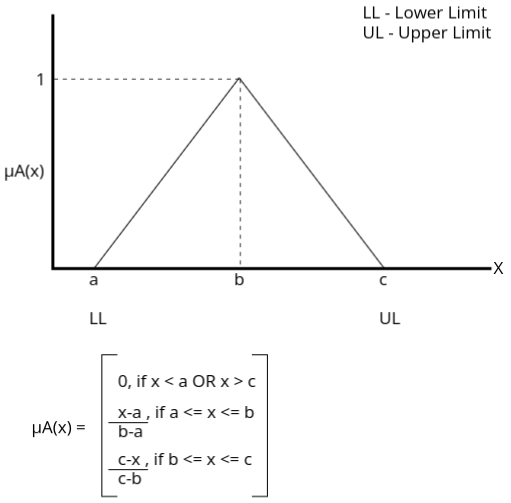

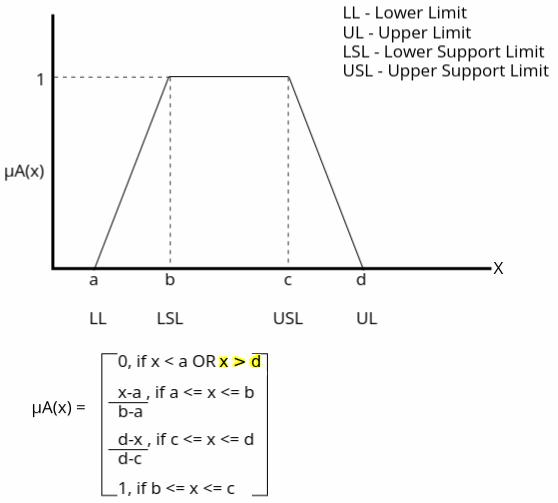

Types of Membership Function

1: Triangular Membership Function

Importance: Simple and computationally efficient, making it ideal for real-time systems. It is widely used due to its linear structure and ease of implementation.

2: Trapezoidal Membership Function

Importance: Extends the triangular membership function by allowing a flat top, providing more flexibility for modeling uncertainties and overlapping ranges in fuzzy systems.

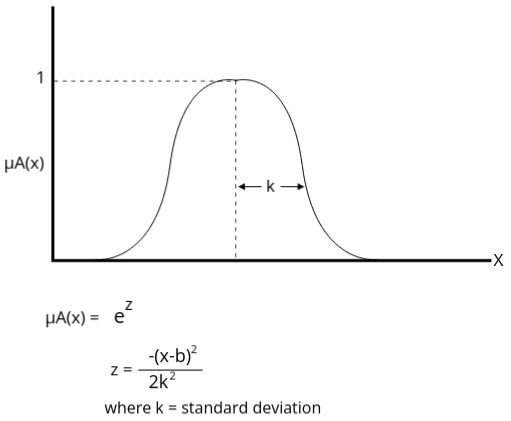

3: Gaussian Membership Function

Importance: Smooth and differentiable, making it suitable for applications requiring gradient-based optimization. It is commonly used in control systems and fuzzy inference systems.

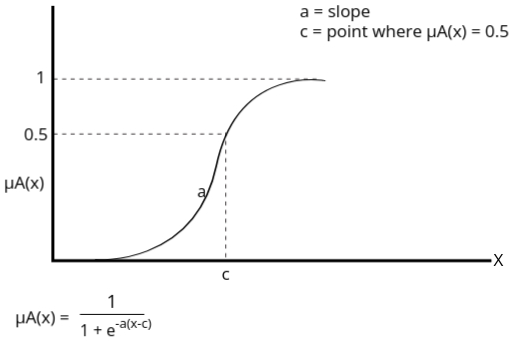

4: Sigmoidal Membership Function

Importance: Offers a smooth transition between membership grades. It is mainly used in artificial neural networks (ANNs) and other systems requiring smooth activation functions.

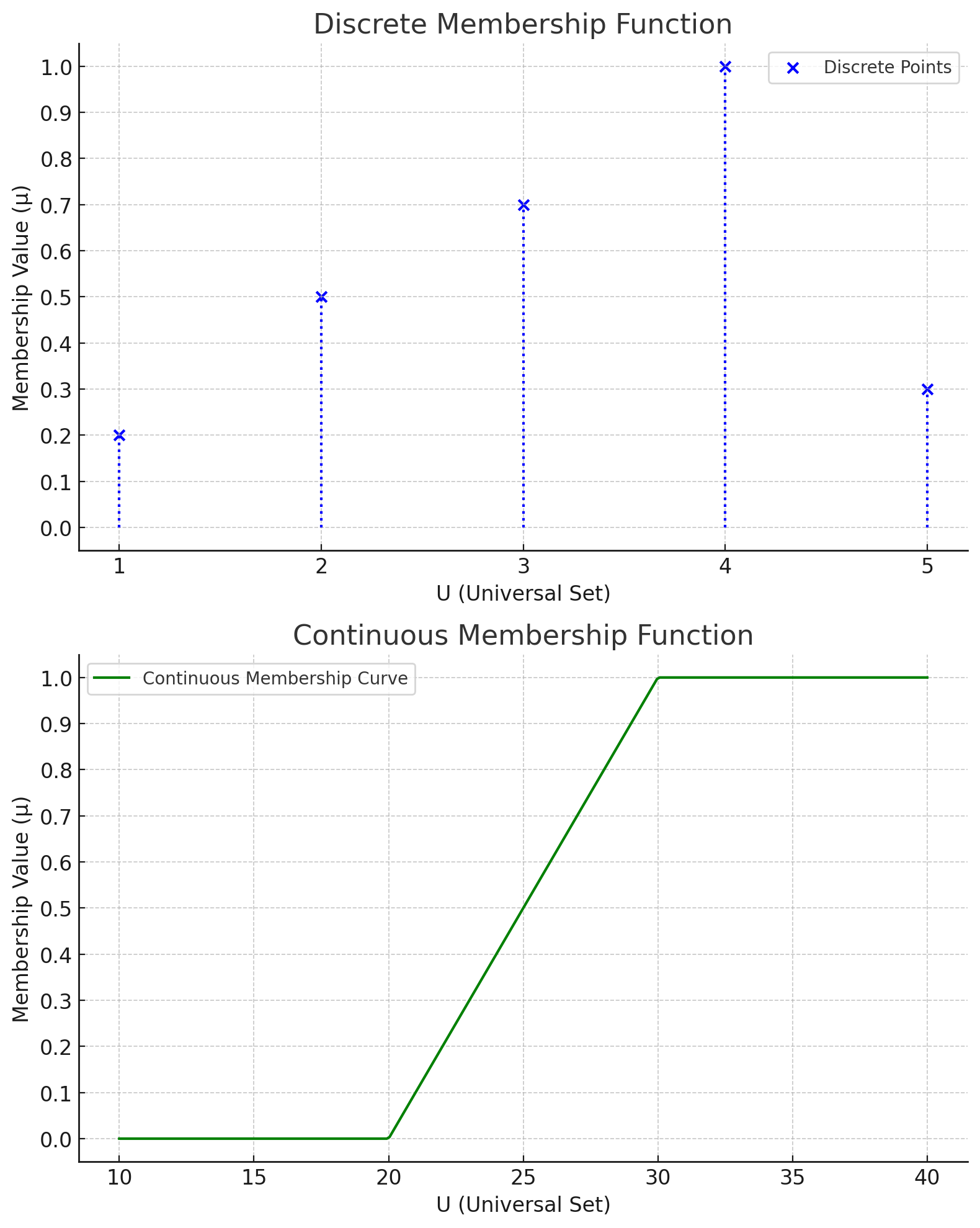
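The four shapes can be sketched as plain functions. The formulas in the figures are not reproduced in the text, so the parameterizations below are the commonly used textbook forms and should be read as assumptions; the parameter names (`a`, `b`, `c`, `d`, `mean`, `sigma`, `slope`, `center`) are likewise illustrative.

```python
import math


def triangular(x, a, b, c):
    """Feet at a and c, peak at b (requires a < b < c)."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def trapezoidal(x, a, b, c, d):
    """Feet at a and d, flat top between b and c (requires a < b <= c < d)."""
    if x <= a or x >= d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    if x <= c:
        return 1.0
    return (d - x) / (d - c)


def gaussian(x, mean, sigma):
    """Bell curve with peak 1 at mean; sigma controls the width."""
    return math.exp(-((x - mean) ** 2) / (2 * sigma ** 2))


def sigmoidal(x, slope, center):
    """S-shaped curve equal to 0.5 at center; slope sets the steepness."""
    return 1.0 / (1.0 + math.exp(-slope * (x - center)))


print(triangular(2.5, 0, 5, 10))
print(trapezoidal(7, 0, 2, 6, 8))
print(gaussian(3, 3, 1))
print(sigmoidal(0, 1, 0))
```

The triangular and trapezoidal forms need only comparisons and one division, which is why they are cheap to evaluate; the Gaussian and sigmoidal forms are differentiable everywhere, at the cost of an exponential.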

Discrete and Continuous Membership Functions

Discrete Membership

- In a discrete membership function, the membership values are defined only at specific, distinct points in the universal set.

- These points are not continuous and are often represented as a series of individual data points.

- Example: Let U = {1, 2, 3, 4, 5} and the fuzzy set A is defined as:

A = {(1, 0.2), (2, 0.5), (3, 0.7), (4, 1.0), (5, 0.3)}.

Here, the membership function μA(x) is only defined for these discrete points: 1, 2, 3, 4, and 5.

- Such membership functions are represented graphically as isolated points, often marked with dots or vertical lines.

Continuous Membership

- In a continuous membership function, the membership values are defined over a continuous range of points in the universal set.

- The membership function is expressed as a smooth curve rather than isolated points.

- Example: Let U represent the range of temperatures in degrees Celsius. The fuzzy set "Warm" may be defined by:

\[ \mu_{\text{Warm}}(x) = \begin{cases} 0, & \text{if } x < 20 \\ (x - 20) / 10, & \text{if } 20 \le x \le 30 \\ 1, & \text{if } x > 30 \end{cases} \]

This membership function defines a smooth transition of values from 0 to 1 as the temperature increases.

- Graphically, a continuous membership function appears as a curve, indicating gradual changes in membership value.
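The "Warm" definition above maps directly onto a small function; the name `mu_warm` is chosen here for illustration.

```python
def mu_warm(x):
    """Membership of temperature x (degrees Celsius) in the set "Warm"."""
    if x < 20:
        return 0.0
    if x <= 30:
        return (x - 20) / 10
    return 1.0


for temperature in (15, 20, 25, 30, 35):
    print(temperature, mu_warm(temperature))
```

Unlike the discrete case, the function returns a value for any real temperature, for example 0.5 at 25 degrees Celsius.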

Fuzzy Inference System

A Fuzzy Inference System (FIS) is a framework for reasoning and decision-making under uncertainty using fuzzy logic. It processes input variables and generates outputs based on a set of fuzzy rules. FIS is widely used in control systems, pattern recognition, and decision-making applications. It bridges the gap between human-like reasoning and computational systems by working with approximate information rather than precise data.

Fuzzy Inference Systems are applied in a wide range of fields to handle uncertain or imprecise data. Some real-life applications include:

- Automatic Climate Control: FIS is used in air conditioning systems to adjust temperature and humidity levels based on user preferences and environmental conditions.

- Washing Machines: Modern washing machines use FIS to determine the optimal washing cycle based on load size, fabric type, and dirt level.

- Automotive Systems: In cars, FIS is applied for functions such as anti-lock braking systems (ABS), gear shifting, and adaptive cruise control.

- Healthcare: FIS helps in diagnosis support systems, where patient symptoms are analyzed to suggest possible conditions and treatments.

- Stock Market Analysis: FIS models are employed to analyze trends and make predictions in stock trading under uncertain market conditions.

- Robotics: Robots use FIS for navigation and decision-making in uncertain environments.

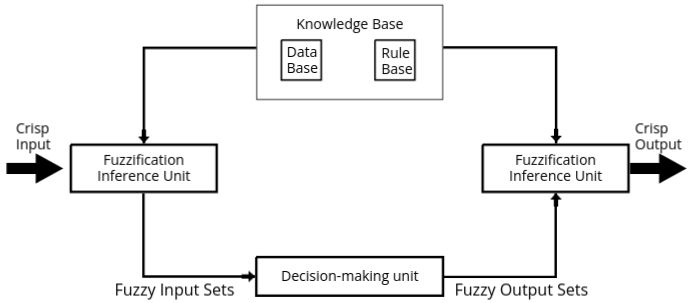

Functional Blocks of FIS

The following five functional blocks are essential for constructing a FIS:

- Rule Base: It contains a collection of fuzzy if-then rules that define the relationship between input and output variables.

- Database: It defines the membership functions of fuzzy sets used in the fuzzy rules, providing the necessary information for fuzzification and inference.

- Decision-Making Unit: This unit applies the fuzzy rules to the input data to derive fuzzy outputs. It combines the results of all applicable rules.

- Fuzzification Interface Unit: It converts crisp (precise) input values into fuzzy sets using membership functions. This step is crucial for handling imprecise or uncertain data.

- Defuzzification Interface Unit: It converts the fuzzy output of the inference process into crisp values for actionable results, making the system usable in real-world scenarios.

Understanding Fuzzification and Defuzzification

- Fuzzification is the process of converting crisp (precise and numerical) input values into fuzzy sets using predefined membership functions. This step allows a system to handle imprecise, uncertain, or vague data by mapping input values to linguistic terms (e.g., "low," "medium," "high") represented as fuzzy sets.

- Defuzzification is the process of converting fuzzy outputs generated by the inference engine into crisp (precise and numerical) values. It translates the fuzzy results into actionable outputs by applying methods like the centroid of area, bisector, or mean of maxima, making the output usable in real-world scenarios.
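Two of the defuzzification methods named above can be sketched for a fuzzy output that has been sampled at a few points. The sample data (a fan speed in percent) and the function names are hypothetical, and the discrete sums stand in for the integrals used with continuous membership functions.

```python
def centroid(samples):
    """Centroid of area for a sampled fuzzy set: sum(x * mu) / sum(mu)."""
    total = sum(mu for _, mu in samples)
    if total == 0:
        raise ValueError("fuzzy set has no positive membership")
    return sum(x * mu for x, mu in samples) / total


def mean_of_maxima(samples):
    """Average of the x values at which the membership is highest."""
    peak = max(mu for _, mu in samples)
    xs = [x for x, mu in samples if mu == peak]
    return sum(xs) / len(xs)


# Hypothetical fuzzy output: (fan speed in %, membership value).
output = [(0, 0.0), (25, 0.2), (50, 0.8), (75, 0.8), (100, 0.6)]

crisp_centroid = centroid(output)
crisp_mom = mean_of_maxima(output)
print(crisp_centroid, crisp_mom)
```

The centroid (about 68.75 here) takes the whole shape into account, including the weight at 100%, while the mean of maxima (62.5) looks only at the peak, so the two methods can give different crisp values for the same fuzzy output.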

Block Diagram of FIS

The block diagram of a Fuzzy Inference System (FIS) illustrates the flow of data through its functional components. The diagram typically consists of the following parts:

- Input: Crisp input values are provided to the system.

- Fuzzification: Converts crisp inputs into fuzzy sets using membership functions.

- Inference Engine: Processes fuzzy inputs using the rule base and database to generate fuzzy outputs.

- Defuzzification: Converts fuzzy outputs into crisp values suitable for real-world use.

- Output: The final crisp output is delivered, which can be used in decision-making or control applications.

Working of FIS

The working of the Fuzzy Inference System (FIS) consists of the following steps:

- Fuzzification: The fuzzification unit applies various fuzzification methods to convert crisp input values into fuzzy inputs using membership functions.

- Knowledge Base: A knowledge base, which includes both the rule base (fuzzy if-then rules) and the database (membership functions), is used to process the fuzzy inputs.

- Inference Mechanism: The inference engine applies the fuzzy rules to the fuzzy inputs to generate fuzzy outputs. This step combines all applicable rules and determines the degree to which each output is activated.

- Defuzzification: The defuzzification unit converts the fuzzy output into a crisp output, making it usable for decision-making or control applications in real-world scenarios.

Methods of FIS

The following are the two important methods of Fuzzy Inference Systems, differing in the way they define the consequent of fuzzy rules:

- Mamdani Fuzzy Inference System: A widely used approach that represents the consequent of fuzzy rules as fuzzy sets. It uses a max-min (or max-product) composition method and requires defuzzification to produce a crisp output.

- Takagi-Sugeno Fuzzy Model (TS Model): This approach represents the consequent of fuzzy rules as a mathematical function (e.g., linear or constant). It simplifies the defuzzification process and is suitable for control applications requiring precise outputs.

Mamdani Fuzzy Inference System

The Mamdani Fuzzy Inference System is one of the earliest and most popular FIS methods. It is characterized by its use of fuzzy sets for both the antecedent and consequent of rules. The steps involved include:

- Fuzzification: Converting crisp inputs into fuzzy sets using membership functions.

- Rule Evaluation: Applying fuzzy rules and combining the results using fuzzy operators (e.g., AND, OR).

- Aggregation: Combining the outputs of all fuzzy rules into a single fuzzy set.

- Defuzzification: Converting the aggregated fuzzy set into a crisp output using methods like the centroid or bisector method.
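The four steps can be traced in a minimal single-input sketch. Everything specific here is an assumption made for illustration: the two rules (if temperature is cold then fan is low; if temperature is hot then fan is high), the ramp-shaped membership functions, the min operator for clipping each consequent, the max operator for aggregation, and a sampled centroid for defuzzification.

```python
def ramp_down(x, lo, hi):
    """1 at or below lo, falling linearly to 0 at hi."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def ramp_up(x, lo, hi):
    """0 at or below lo, rising linearly to 1 at hi."""
    return 1.0 - ramp_down(x, lo, hi)


# Hypothetical membership functions (temperature in C, fan speed in %).
def temp_cold(t):
    return ramp_down(t, 10, 30)


def temp_hot(t):
    return ramp_up(t, 10, 30)


def fan_low(s):
    return ramp_down(s, 0, 100)


def fan_high(s):
    return ramp_up(s, 0, 100)


# Rule base: (antecedent, consequent) pairs.
RULES = [(temp_cold, fan_low), (temp_hot, fan_high)]


def mamdani(temp, steps=101):
    speeds = [100 * i / (steps - 1) for i in range(steps)]
    # Steps 1-2. Fuzzify the input and get each rule's firing strength.
    strengths = [antecedent(temp) for antecedent, _ in RULES]
    # Step 3. Clip each consequent at its strength (min), then aggregate (max).
    aggregated = [
        max(min(w, consequent(s))
            for w, (_, consequent) in zip(strengths, RULES))
        for s in speeds
    ]
    # Step 4. Defuzzify with a sampled centroid.
    return sum(s * mu for s, mu in zip(speeds, aggregated)) / sum(aggregated)


for t in (10, 20, 30):
    print(t, round(mamdani(t), 2))
```

With these assumed shapes the crisp fan speed rises with temperature (about 33, 50, and 67 for 10, 20, and 30 degrees Celsius). A rule with several antecedents joined by AND or OR would typically combine their degrees with min or max before the clipping step.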

Takagi-Sugeno Fuzzy Model

The Takagi-Sugeno Fuzzy Model is designed for precise and computationally efficient outputs. It differs from the Mamdani method in the following ways:

- Consequent Representation: The consequent of rules is represented as a linear or constant mathematical function instead of a fuzzy set.

- Rule Evaluation: The rules are evaluated similarly to Mamdani, but the output is directly calculated using the functions defined in the consequents.

- Defuzzification: The weighted average method is typically used, making the defuzzification process straightforward and fast.

This model is commonly used in engineering and control systems due to its efficiency and ability to handle large-scale problems.
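A minimal sketch of the Takagi-Sugeno approach, under stated assumptions: the two rules, their membership functions, and the consequent functions (one constant, one linear in the input) are all hypothetical and chosen only to show the weighted-average step.

```python
def ramp_down(x, lo, hi):
    """1 at or below lo, falling linearly to 0 at hi."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def temp_cold(t):
    return ramp_down(t, 10, 30)


def temp_hot(t):
    return 1.0 - ramp_down(t, 10, 30)


def sugeno(temp):
    # Each rule pairs a firing strength with a crisp consequent function.
    rules = [
        (temp_cold(temp), lambda t: 20.0),           # constant consequent
        (temp_hot(temp), lambda t: 2.0 * t + 20.0),  # linear consequent
    ]
    total = sum(w for w, _ in rules)
    if total == 0:
        raise ValueError("no rule fired for this input")
    # Weighted average of the rule outputs; no output fuzzy set is built.
    return sum(w * f(temp) for w, f in rules) / total


for t in (10, 20, 30):
    print(t, sugeno(t))
```

Because each consequent already yields a number, the output is a single weighted average (20, 40, and 80 for the three inputs above) rather than a centroid over an aggregated fuzzy set, which is where the method's speed advantage comes from.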